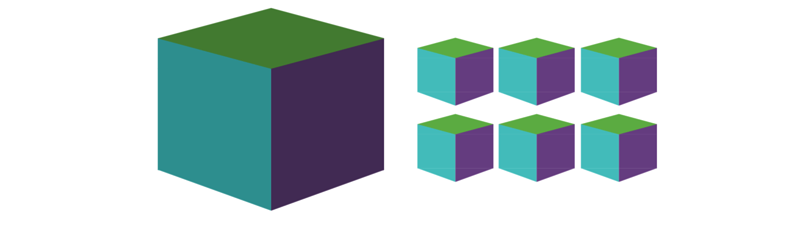

Splitting large files into smaller chunks

I remember when I was young, back when MP3s first appeared, we had to move songs (usually about 3 MB each) on 1.44 MB floppy disks, because CD-RW drives were not very affordable at that time.

To do that we used a Windows program called Hacha (Axe). It could split a large file into smaller pieces and later join those pieces back together, so we split each song into three pieces and carried it on three floppy disks.

Today we have large-capacity devices such as portable hard drives and flash drives that can hold large amounts of data. However, flash drives are commonly formatted as FAT32, which limits a single file to 4 GB (strictly, 4 GiB minus one byte). The same technique works in that case, for example to move a large database dump, and on a Unix-like OS we need nothing more than the command line.
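Before splitting anything, it can help to check whether a file actually exceeds the FAT32 limit. The following is a minimal sketch, assuming a POSIX shell; `fits_on_fat32` is a hypothetical helper name, and the byte count comes from `wc -c`, which is available with both GNU and BSD tools.

```shell
#!/bin/sh
# FAT32 cannot store a file of 4 GiB or more: the largest allowed size
# is 4 GiB minus one byte.
FAT32_MAX=4294967295

# Hypothetical helper: reports whether the file named in $1 fits on FAT32.
# Returns 0 if it fits, 1 if it needs to be split first.
fits_on_fat32() {
    # wc -c prints the size in bytes; tr strips the padding BSD wc adds.
    size=$(wc -c < "$1" | tr -d ' ')
    if [ "$size" -le "$FAT32_MAX" ]; then
        echo "$1: $size bytes, fits on FAT32"
        return 0
    fi
    echo "$1: $size bytes, too large for FAT32; split it first"
    return 1
}
```

For example, `fits_on_fat32 dump.sql` (a hypothetical file name) prints the size and tells you whether the file needs to be split.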

Unix-like systems ship with the split command to break a file into pieces and the cat command to join the pieces back together later.

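To split MYBIGFILE into pieces of 1000 MB, use:

```shell
split -b 1000m MYBIGFILE
```

With the `m` suffix the size is in mebibytes, so every piece is 1000 × 1024 × 1024 bytes except the last one, which holds whatever remains; that keeps each piece well under the FAT32 limit. By default the pieces are named xaa, xab, xac and so on.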
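To see what split produces without waiting on gigabytes of data, here is a small-scale rehearsal. It is a sketch under stated assumptions: `sample.bin` is a hypothetical stand-in for MYBIGFILE, and pieces of 1k (1024 bytes) replace 1000m so the demo finishes instantly. It works inside a scratch directory created by `mktemp -d` so that no existing files named xaa, xab, ... are overwritten.

```shell
#!/bin/sh
# Rehearsal of the split step on a small sample file.
# Assumptions: sample.bin stands in for MYBIGFILE, and 1k pieces stand in
# for the 1000m pieces, so the demo runs instantly.
set -e

# Work in a scratch directory so existing xaa, xab, ... are not overwritten.
workdir=$(mktemp -d)
cd "$workdir"

# Build a 2500-byte sample file: "abcdefghi\n" repeated, cut at 2500 bytes.
yes abcdefghi | head -c 2500 > sample.bin

# Split into 1 KiB (1024-byte) pieces named xaa, xab, xac, ...
split -b 1k sample.bin

# Show each piece and its size in bytes.
for piece in x??; do
    printf '%s %s\n' "$piece" "$(wc -c < "$piece" | tr -d ' ')"
done
```

It should list xaa and xab at 1024 bytes each and xac with the remaining 452 bytes.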

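Later, to combine the pieces (xaa, xab, xac …) back into the large file called MYBIGFILE, use:

```shell
cat x?? > MYBIGFILE
```

The shell expands `x??` in alphabetical order, which is the same order in which split named the pieces, so cat writes them back in sequence. Run it in a directory that holds only the pieces, since `x??` matches any three-character name starting with x.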
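A missing or damaged piece still lets cat finish without complaint, it just produces a broken file, so it is worth checking the result after joining. This sketch repeats a small rehearsal (with the hypothetical names `sample.bin` and `rejoined.bin`, and 1k pieces instead of 1000m), joins the pieces with the same `cat x??` command, and uses `cmp` to confirm the rebuilt file is byte-for-byte identical to the original.

```shell
#!/bin/sh
# Round trip: split a sample file, join the pieces with cat, and check that
# the result is identical to the original.
# Assumptions: sample.bin and rejoined.bin are illustrative names, and the
# 1k piece size keeps the demo fast.
set -e

# Scratch directory so the x?? glob only sees pieces created here.
workdir=$(mktemp -d)
cd "$workdir"

yes abcdefghi | head -c 2500 > sample.bin
split -b 1k sample.bin

# Join the pieces in glob (alphabetical) order.
cat x?? > rejoined.bin

# cmp -s prints nothing and exits with status 0 only if the files match.
if cmp -s sample.bin rejoined.bin; then
    echo "OK: rejoined.bin matches sample.bin"
else
    echo "MISMATCH: rejoined.bin differs from sample.bin" >&2
    exit 1
fi
```

When the pieces travel to another machine, the original is not there to compare against; computing a checksum on both sides (for example with `sha256sum` on GNU systems or `shasum -a 256` on macOS) serves the same purpose.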